In the retail industry, manufacturers are accelerating the growth of their direct-to-consumer businesses; retailers are maturing private brand programs; and consumers expect personalized products and experiences as well as full transparency into responsible sourcing and sustainable practices. COVID-19 has also exposed the risks of traditional product creation models and long product development cycles.

Now in its fourth year, the 2021 3DRC Grand Challenge: 3D Virtualization for Retailers and Brands invited the academic and research communities to present creative research and solutions with the potential to transform the way companies create, make, and sell new products by harnessing the power of digital product creation.

This year’s entries were asked to address a key question: how can we build and advance an industry-wide capability to develop products virtually and collaboratively across the product creation lifecycle?

Among the many creative ideas we have received, we are proud to announce this year’s winners:

- Industry Winner: Vital Mechanics

- Academic Institution Winners: Universidad Rey Juan Carlos and the University of Florence

Universidad Rey Juan Carlos: A Machine Learning Solution to Virtual Try-on

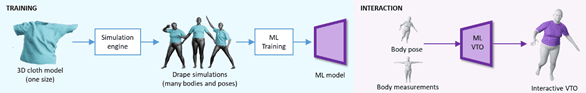

Online shopping is becoming increasingly convenient and prevalent, as it lets people shop for outfits anywhere at any time. However, unlike in-store shopping, it does not let us try on outfits to pick the best style and size. Virtual try-on (VTO) could transform online shopping by giving customers a trustworthy assessment of how clothes fit. When buyers browse a product page or click on an image, a VTO app launches and automatically plays an animation of an avatar wearing the outfit. Buyers can check the style and drape in motion, change the camera and lighting, and decide whether the clothes suit their style. However, this VTO experience has its own challenge: it requires real-time animation of the outfit’s drape on avatars of arbitrary sizes.

To address this challenge, Universidad Rey Juan Carlos developed a machine-learning solution for cloth animation that makes this VTO experience possible. Given a 3D model of the outfit, a preprocessing step computes multiple drapes of the outfit on 3D avatars with different body shapes and poses. With this preprocessed data, a machine-learning algorithm can quickly train an animation model. When the VTO app is launched, this fast animation model is used to render dynamic animations on customer avatars of arbitrary body shape.
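The pipeline described above (offline drapes, then training, then fast runtime prediction) can be illustrated with a toy sketch. This is not the Universidad Rey Juan Carlos method: the source does not specify the learning model, so a per-vertex linear least-squares regressor stands in for it, and `simulate_drape` is a hypothetical synthetic function standing in for a real cloth simulator. Body shape and pose are reduced to one number each purely for illustration.

```python
import random

def simulate_drape(shape, pose, n_vertices=4):
    """Hypothetical stand-in for an offline cloth simulator.
    Returns one synthetic displacement per garment vertex."""
    return [(0.5 + 0.1 * v) * shape + (0.2 - 0.05 * v) * pose + 0.01 * v
            for v in range(n_vertices)]

def solve(a, b):
    """Solve the small linear system a x = b by Gauss-Jordan elimination
    with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def train(samples):
    """Fit, for each vertex, displacement ~ w0 + w1*shape + w2*pose
    by solving the normal equations (X^T X) w = X^T y."""
    feats = [[1.0, s, p] for s, p, _ in samples]
    xtx = [[sum(f[i] * f[j] for f in feats) for j in range(3)]
           for i in range(3)]
    n_vertices = len(samples[0][2])
    weights = []
    for v in range(n_vertices):
        xty = [sum(f[i] * d[v] for f, (_, _, d) in zip(feats, samples))
               for i in range(3)]
        weights.append(solve(xtx, xty))
    return weights

def predict(weights, shape, pose):
    """Runtime step: evaluate the trained model, no simulation needed."""
    return [w[0] + w[1] * shape + w[2] * pose for w in weights]

# Preprocessing: drape the garment on many sampled body shapes and poses.
rng = random.Random(0)
samples = []
for _ in range(50):
    s, p = rng.uniform(-1, 1), rng.uniform(-1, 1)
    samples.append((s, p, simulate_drape(s, p)))

# Training: fit the fast animation model from the preprocessed drapes.
model = train(samples)

# Runtime: predict the drape for a customer avatar not seen in training.
predicted = predict(model, 0.3, -0.4)
```

The point of the split is that the expensive simulation runs only during preprocessing; at runtime `predict` is a handful of multiplications per vertex, which is what makes real-time animation on arbitrary avatars plausible. A production system would use a far richer model and real simulation data.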

Image source: Universidad Rey Juan Carlos

Currently a prototype, this machine-learning VTO solution has been tested on a variety of garments and outfits and licensed to SEDDI for commercial use. Potential applications could include handling of full-detail garments; high-quality rendering of garment appearance; personalized rendering of avatar appearance; and connectivity to 3D garment design tools for data generation.

For more information, contact:

- Miguel A. Otaduy, Prof. of Computer Science (Universidad Rey Juan Carlos), [email protected]

- Alan Murray, VP of Product (SEDDI), [email protected]

University of Florence: Tactile Display for Online Shopping

What if you could open your online shopping app, put on a smart glove, and browse through the retailer’s items, not only to see them, but also to feel their softness and texture as if you were in a real shop? Current augmented reality shopping apps can help you see what a piece of merchandise looks like. However, how would your shopping experience change if you could also use your sense of touch?

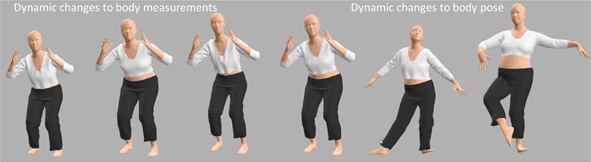

The novel wearable tactile display technology that we have just created generates tactile stimuli of softness at the fingertips in a simple and cost-effective way. It consists of compact, low-cost tactile displays that are worn on the fingertips and driven by a lightweight, portable, low-cost pneumatic unit controlled by external software.

This kind of system could, for the first time, allow users to touch and feel virtual products rather than just visualize them on a screen, thereby making the online shopping experience truly realistic.

Augmented/virtual reality systems that give customers tactile feedback, especially of softness, have disruptive potential because they can make the experience as immersive and realistic as possible. Customers could use their sense of touch to feel the compliance (softness) of products. Increased engagement in the augmented/virtual reality experience is expected to increase user retention and satisfaction, with a beneficial impact on sales, purchase frequency, and range of items sold.

The University of Florence presented a tactile display technology that offers a wearable and affordable solution. It is based on fingertip-mounted, pneumatically driven tactile displays, each consisting of a plastic chamber in which pressurized air deforms an elastomeric membrane that presses on the finger pulp. The driving pneumatic unit can be controlled by any personal computer and weighs 380 g (not yet optimized), making the technology easily portable.

The new wearable tactile display technology developed by the University of Florence (adapted from G. Frediani, F. Carpi, “Tactile display of softness on fingertip”, Scientific Reports, Vol. 10, 20491, 2020). Image Source: University of Florence

For more information, contact:

- Gabriele Frediani, [email protected]

- Federico Carpi, [email protected]

Vital Mechanics: Soft Avatars for Accurate Fit Prediction

Accurate fit prediction requires trustworthy avatars that behave the way humans do: they must be soft, based on data measured from real humans, and respond realistically to contact with garments. The low fidelity of current fit technologies is a fundamental roadblock to the digital transformation of the apparel industry. Vital Mechanics has developed VitalFit, a solution that provides high-fidelity soft avatars for virtual fit testing and integrates easily into existing industry-wide workflows and 3D garment CAD software.

Accurate fit prediction can significantly reduce waste in the apparel industry. Real bodies, even within a size, differ dramatically due to factors such as age, gender, stature, and BMI; very large and very small sizes are rarely tested today. VitalFit enables inclusive designs by making it easy to test with a diverse set of soft avatars.

Realistic tissue properties are essential for high-fidelity soft avatars. Vital Mechanics builds on more than two decades of research at the University of British Columbia on soft tissue simulation, contact, and friction, research that was the first to measure the distribution of soft tissue properties of real human participants using a novel tissue probe. On this basis, Vital Mechanics can construct soft avatars directly from 3D body scans, or even from 1D tape measurements for ease of use. VitalFit uses advanced computational models of the body, the garment, and contact to predict key fit attributes such as garment ease and tissue compression.

VitalFit’s scalable cloud architecture offloads compute-intensive fit simulations to Vital Mechanics’ servers, so no additional investment in computers is needed. 3D fit tests can be run at different stages of the product life cycle, and authenticated users can access the results securely from any web browser. This facilitates communication within teams working remotely and collaboration between retailers, brands, and vendors. VitalFit is accessible through a well-documented web API that can be integrated with existing 3D workflows. For example, using Vital Mechanics’ VitalFit Bridge plugin, garments designed in Browzwear’s VStitcher can be uploaded to the VitalFit service with a few mouse clicks.

Vital Mechanics is rolling out the virtual fit testing service to a select group of customers in 2022. The company hopes to unlock new value across the entire product life cycle, potentially reducing both pre-production waste and deadstock, and increasing speed to market.

For more information, contact [email protected].

3D Virtualization for Retailers and Brands at IEEE SA

The 3DRC Grand Challenge is a joint initiative of the 3D Retail Coalition (3DRC), IEEE SA, and PI Apparel for the retail/apparel industry to explore technology applications and standards enabling next-generation consumer experiences. The project originated with support from the IEEE 3D Body Processing (3DBP) Industry Connections (IC) activity, a collaborative effort to assess standards opportunities intersecting emerging technologies to enhance industries such as retail, medical, health and wellness, and sports and athletics. Participation in 3DBP subgroups and activities is welcome.

Learn more about the 3DRC Grand Challenge and the 3D Body Processing IC Activity.