What Do You Really Mean?

Language is both ambiguous and important in this fast-paced and changing artificial intelligence (AI) market. The terms AI, machine learning (ML) and deep learning (DL) are often used interchangeably, yet each has its own properties. Let’s venture into the jungle of technical definitions and ethical perspectives to understand, at a high level, how AI, ML, and DL relate to and differ from one another, and how these technologies are influencing medical research.

The AI Jungle

AI is a field of computer science that was conceived in the 1950s with a broad and bold goal: mimicking human intelligence by the end of the 20th century. The objective was to give computers the ability to perform tasks that require intelligence, such as understanding speech, recognizing objects, reasoning abstractly and learning from experience rather than being explicitly programmed. It did not get to the finish line. In the 1960s, scientists became interested in developing machines that learn from data. In the 1970s and 1980s, another breakthrough technology emerged: artificial neural networks, which are interconnected systems inspired by biological neural circuits.

Several developments have since brought AI back at a more impactful level: the development of neural networks; the creation of open source tools and standards that facilitate training of the algorithms; the use of graphics processing units (GPUs) that provide the computing power to process the data; and the availability of large amounts of data generated by digital technology, the internet of things (IoT) and smartphones.

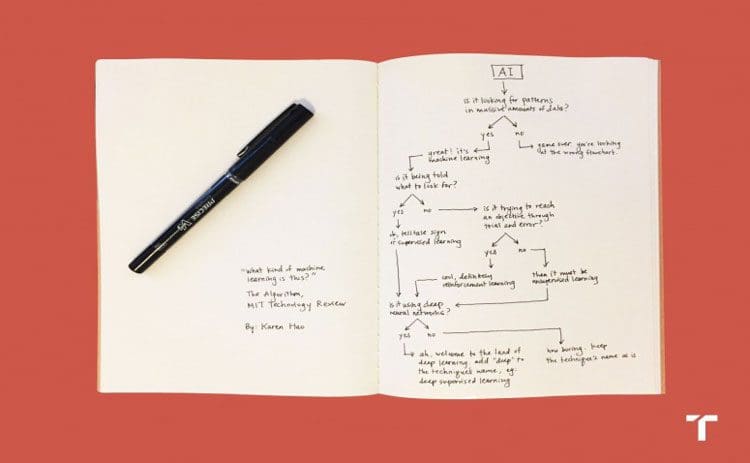

Machine Learning

Machine Learning is a subset of AI. From a mathematical perspective, supervised machine learning, the most common form of machine learning, can be defined as a nonlinear optimization problem: the computer searches for the model parameters that best fit a set of labeled examples. The aim is to solve the identified problem to its best solution, although in practice there is no general guarantee that the search finds it. Basically, ML gives the computer the ability to learn and make decisions without being explicitly programmed and without human intervention. Do you remember HAL in “2001: A Space Odyssey”? “I’m sorry Dave, I’m afraid I can’t do that”? Hollywood saw it coming.
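To make the optimization view concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a production method: the five data points, the function names `mean_squared_error` and `fit_line`, the learning rate and the step count are all made up for this example, and the model is deliberately a straight line so the result is easy to check. Real ML models have many more parameters and are nonlinear.

```python
def mean_squared_error(w, b, xs, ys):
    # Average squared gap between the prediction w*x + b and the label y.
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_line(xs, ys, lr=0.05, steps=2000):
    # Plain gradient descent on the mean squared error.
    # lr and steps are illustrative choices, not recommended defaults.
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical labeled examples generated from y = 2x + 1.
XS = [0.0, 1.0, 2.0, 3.0, 4.0]
YS = [1.0, 3.0, 5.0, 7.0, 9.0]

if __name__ == "__main__":
    w, b = fit_line(XS, YS)
    print(f"learned w={w:.4f}, b={b:.4f}")
    print(f"loss={mean_squared_error(w, b, XS, YS):.2e}")
```

Nobody tells the program that the slope is 2 and the intercept is 1; it recovers both from the examples by repeatedly nudging the parameters in the direction that reduces the error. That is the sense in which the computer learns rather than being programmed.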

Deep Learning

Deep Learning, a subset of ML, uses artificial neural networks and is driving much of today’s progress in AI. DL needs lots of data to succeed. Now that far more data is available, DL is transforming healthcare and should help uncover new ideas and move research, diagnosis, and disease intervention and prevention to higher levels of confidence. By utilizing the capabilities of ML and DL, AI stands to be a revolutionary tool not only for the health science sector but for many other sectors in the future.

The Rush to Improve Outputs and Speed

A key component of an AI project’s success is machine training, and many companies are now rushing to improve training techniques. However, certain pain points are built into the current process. Training consists of computer searches, each initiated at a random starting point. The current tool, stochastic gradient descent (a method of searching using random samples), is not repeatable and does not offer a deterministic (provable) result. As a consequence, the process requires multiple searches, each initiated at a different random starting location, to build confidence in the outcome.
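The effect of random starting points can be sketched in a few lines of Python. This is a toy illustration, not a real training pipeline: the one-parameter loss `w**4 - 3*w**2 + w`, the Gaussian noise that stands in for random sampling of the data, and the names `loss_gradient`, `sgd_run` and `multi_start` are all assumptions made for this example. The loss has two valleys, one near w = -1.30 and one near w = 1.13, so a search can settle in either.

```python
import random


def loss_gradient(w):
    # Gradient of the toy non-convex loss f(w) = w**4 - 3*w**2 + w.
    return 4 * w**3 - 6 * w + 1


def sgd_run(seed, start=None, steps=2000, lr=0.01, noise=1.0):
    # One noisy gradient search. The seed controls both the random
    # starting point and the noise added to every gradient step.
    rng = random.Random(seed)
    w = rng.uniform(-2.0, 2.0) if start is None else start
    for _ in range(steps):
        noisy_grad = loss_gradient(w) + rng.gauss(0.0, noise)
        w -= lr * noisy_grad
    return w


def multi_start(seeds):
    # Repeat the search once per seed and collect where each one ends up.
    return {seed: sgd_run(seed) for seed in seeds}


if __name__ == "__main__":
    for seed, w in multi_start(range(8)).items():
        print(f"seed {seed}: finished near w = {w:.2f}")
```

The seeds are fixed here only so the demonstration can be rerun; each seed plays the role of one independent random search. Runs that start on different sides of the hill between the two valleys finish in different places, and no single run can show that it found the better valley. That is why practitioners repeat the search many times and compare the results.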

Standards Conformance

Because AI is still evolving, standards that facilitate access and control costs are being explored for both hardware and software applications. On the software side, a leading effort comes from the Khronos Group, an industry consortium that has developed the Neural Network Exchange Format (NNEF) standard.

On the hardware side, there are three main contenders in the race to standardize: OpenCAPI (Open Coherent Accelerator Processor Interface), CCIX (Cache Coherent Interconnect for Accelerators), and Gen-Z. Each consortium has its own distinguishing features, yet their capabilities also overlap. The goal of each is the same: to simplify communication, increase speed and reduce system complexity.

Will Robots Take Over?

Many concerns have been raised about the use of AI. There is no shortage of Hollywood movies that depict dystopian scenarios brought about by intelligent machines. Thankfully, those films are still in the science fiction genre; nevertheless, the issue of AI for good and the ethics around it are core discussions for world leaders. Discussions, both pro and con, are under way at all levels and in all industries worldwide to shape our next generations of science and conduct. For example, the United Nations is evaluating the role AI and ML can play in helping to achieve the 17 Sustainable Development Goals (SDGs). For now, with no clear direction, concern remains.

In practice, AI can be used with satellite imagery to monitor crop yields and deforestation and to track livestock. It can be used to monitor and predict disease outbreaks and provide medical diagnostics in remote and underserved areas. AI can also be used to improve efficiency, optimize resource usage and accelerate progress towards the SDGs.

The bottom line is a commitment to the responsible use of data by all for all.

To learn more, listen to our latest Cutting Edge Technologies for Life Sciences Podcast below. “AI vs ML: From Bench-to-Bedside, A Technical Insider’s Perspective” features Maria Palombini, life sciences practice lead, IEEE Standards Association in an interview with Nate Hayes, chairman, founder, and CEO, Modal Technology Corporation, and Arun Shroff, director of technology and innovation, Star Associates.