Highlights

- Technology that can predict mood or simulate emotion has the potential to impinge on the outcomes of our lives, underscoring the need for an ethics standard for empathic AI.

- Currently under development, the IEEE P7014 standards project is focused on five key aspects of empathic AI ethics.

- In parallel with the standard's development, the xplAInr project has been created as a toolkit that began with a focus on empathic AI and has become increasingly generalizable to any autonomous and intelligent system.

How do you feel about machines that can read your emotions?

The IEEE P7014™ Working Group is writing a Standard for Ethical Considerations in Emulated Empathy in Autonomous and Intelligent Systems. Our work so far has led us down a wide network of ethical and technical rabbit holes, some unique to this new field of “empathic AI” and others more general. Below are some of the most salient issues, developments, and outcomes of the group’s work. We invite your participation.

The Empathic AI Challenge

Your shifting emotional states are fundamental to how you live and engage with the world. They are influenced by—and in turn apply influence to—your behavior, decisions, thoughts, and relationships. Thus, any technology you encounter that can predict mood or simulate emotion has the potential to impinge on the outcomes of your life and the lives of those around you.

The development of an ethics standard for empathic AI is an exploration into uncharted territory. The underlying science of affect and emotion remains controversial, with disagreement on some of the most fundamental aspects, such as what an emotion is in the first place. What’s more, the technical field of affective computing, emotion recognition, and empathic AI – the world has yet to agree on a name for it – is relatively new and changing fast. On top of that, the alignment of standardization and ethics is itself a nascent and knotty challenge.

After more than three years of collaboration, the IEEE P7014 Working Group is still debating which socio-technical considerations are unique to empathic AI. However, we are in broad agreement that this technology engenders potential harms at a whole new level of intimacy and impact, deserving of dedicated cautions, rules, and guidance.

Key Aspects of Standardizing Empathic AI Ethics

Because the standard is still under development, much could change before the final publication of IEEE 7014, let alone in the coming years as the standard and related technology ethics efforts race to keep up with innovation. However, a core set of issues and norms is now at the center of our focus. Some of these topics have also reached beyond the group, connecting with published papers, new tools, public talks, and other outputs.

1. Framework Selection

What is the best angle of approach for writing this standard, or indeed for producing highly ethical empathic systems?

We can start by considering underlying frameworks of moral philosophy (e.g. utilitarianism, deontological, or virtue ethics) or existing ethical guidance frameworks such as the UN’s Universal Declaration of Human Rights and the IEEE Ethically Aligned Design. Going further, we must also consider how to structure and position the content of the standard, such as by;

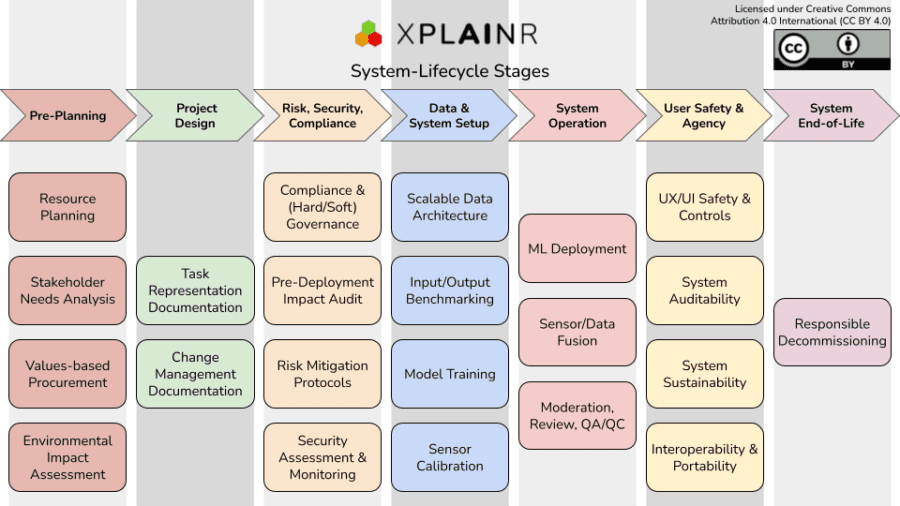

- Laying out a typical AI development lifecycle, or;

- Enumerating the risks and issues to address, or;

- Classifying the different stakeholders related to the system, or;

- Leading with instructive use cases.

All of the above frameworks can be relevant to, and influence the ultimate shape of, the standard. Each comes with its own concerns, and we have been grappling with every one of them.

Learn more:

- Ethical Concerns: An overview of Artificial Intelligence System Development and Life Cycle – by IEEE P7014 members, Hassan Al Shazly, Angelo Ferraro, and Karen Bennet.

- Developing Use Cases to Support an Empathic Technology Ethics Standard – by IEEE P7014 members, Randy Soper, Karen Bennet, Pablo Rivas, and Mathana.

2. Handling Intimate Data

Moving beyond existing concepts of “personal” data, such as name and address, empathic AI delves into our inner emotional lives, broaching uniquely high levels of intimacy. Thus, our group is collaborating on norms and guidance for appropriate levels of data privacy, meaningful consent, and data-usage limitation, all with due consideration for corollary outcomes such as potentially stifling accessibility, interoperability, business, and innovation.

3. Culture and Diversity

When trying to ensure that empathic AI is built with the broadest and fairest cultural considerations possible, it is essential to analyze the unique and heightened cultural aspects that empathic AI entails. Emotions are understood and expressed differently across all dimensions of our diverse human population, with greatly varied personal and societal outcomes. At the same time, as we have seen from notorious examples of bias in related systems such as facial recognition, there are underlying biases and limitations to the human data and emotional models on which affective-computing systems are built.

Learn more: Risks of Bias in AI-Based Emotional Analysis Technology from Diversity Perspectives – by IEEE P7014 Vice-Chair, Sumiko Shimo.

4. Affect and Rights

Consider the confluence of, on the one hand, widely recognized human rights, such as the right to freedom of thought, conscience, and religion, and on the other hand, the potential for empathic technologies to interact with our underlying (and often unintended or nonconscious) affective states. To what extent are our feelings already protected by our rights, and to what extent do they require new rights-based protection? How can this protection be facilitated?

Learn more: Affective Rights: A Foundation for Ethical Standards – by IEEE P7014 member, Angelo Ferraro.

5. Transparency and Explainability

Any technical system capable of societal or ecological impact demands appropriate levels and methods of transparency. But here again, empathic AI deserves special attention. Considering the scientific, technical, and philosophical complications outlined above, this explainability challenge is far from trivial. System makers carry the burden of explaining to any potentially affected stakeholders how and why the system selects and filters affective data, generates models of affective states, and subsequently acts on those models.

In parallel with the standard's development, the xplAInr project has been created as an explainability toolkit that started life with a focus on empathic AI but has become increasingly generalizable to any autonomous and intelligent system. It follows a typical AI system lifecycle and invites all stakeholders to explore methods for a greater understanding of the system and its potential impacts. We have been developing xplAInr with each stakeholder group in turn, starting with system developers, then consulting policy-makers and investigators, and then increasingly involving end-users and wider society.

Get Engaged with IEEE P7014

While any sufficiently advanced technology can engender complex ethical discussion, there is something particularly personal about empathic AI. Everyone has a stake in how we use this technology to advance the wellbeing of people and planet, and in how we avoid using emotions as a tool for manipulation and exploitation.

We welcome you to guide us toward a more empathic future:

- Learn about the IEEE P7014 Working Group – and join the group!

- Explore the overarching work of the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems and Ethics in Action.

Author: Ben Bland, IEEE P7014 Emulated Empathy in Autonomous and Intelligent Systems Working Group Chair