Q&A with Thad Starner, director/founder of Georgia Tech’s Contextual Computing Group

What is your vision for how technology will benefit humanity?

We work on intelligence augmentation in my group at Georgia Tech. We are trying to make intelligent assistants that are so fast to access and so convenient that they can be used all the time without much thought. Let me give an analogy using binoculars and eyeglasses.

Both binoculars and eyeglasses help you see better, but binoculars are not part of you. They have a distinct sense of “other” about them. You bring binoculars to your eyes when you need them to look at something in the distance, and then you put them back down when they are not needed. You don’t tend to continue looking through them for extended periods of time because they require too much concentration and physical effort to hold in place. Binoculars also require some thought and time to use. Each time you bring them to your eyes, you need time to position them so you can see through them, and they often require manual refocusing when moving from one target to another. The time between your intention to use binoculars and when you can actually bring them to your eyes and use them is on the order of several seconds.

Compare binoculars to eyeglasses or sunglasses, which also augment your ability to see. Eyeglasses are worn on the face, and, once you are wearing them, you do not think about them. They become part of you, and you just see through them, seemingly without any effort. Therein lies an interesting paradox: Bringing technology closer to the body helps get it out of the way.

Wearable computing, when created carefully, helps get technology out of the way. The technology almost becomes part of us, like eyeglasses. When we add intelligence to wearable technology, the possibilities become quite exciting. Can we make intelligent assistants that are more like eyeglasses than binoculars? Can we make intelligence augmentation which you just “think through” in much the same way you see through eyeglasses? If that is possible, we can augment users’ natural abilities on a different level than we have ever seen. I believe we can “level up” humanity with this technology, more than we already have with mobile phones.

How would you describe “my mama’s wearables” in relation to the leading-edge wearables we’ll learn about in your session?

The most successful wearable computers to date are digital music players, mobile phones and Bluetooth headsets. These devices meet my definition of wearable computing because they support a primary task being performed in the real world, often while the user is on the go, instead of being the primary task themselves. For example, an MP3 player provides background music while walking to work, and a Bluetooth headset allows conversation with a spouse to determine what cat food to buy while grocery shopping. Fitness trackers keep track of goals while working out or encourage wearers to change everyday behaviors, such as taking the stairs instead of riding the elevator. These are the devices “your mama” might use.

What Melody Jackson and I will be presenting are uses of wearables and interfaces for them that are, we hope, unexpected and pre-competitive—before the stage where a startup might be formed around the idea. For example, we will show an earbud-based speech interface that does not require sound and a way to recover imagined sign language directly from brain signals. Our passive haptic learning devices help wearers learn manual skills like playing piano, typing Braille or keying Morse code without attention. Melody and I hope to have a live demo of a wearable computer that helps working dogs better communicate with humans.

What was it like to be wearing a computer in the mid 1990s?

I first tried to make a wearable computer for everyday use in 1990, but it was too difficult. I began actually wearing a head-worn, display-based computer as an intelligent assistant in my everyday life in 1993. It was well before the PalmPilot made PDAs popular and before the release of the Apple Newton. I had taken a year off school to work with the Speech Systems Group at BBN under John Makhoul. At that time, BBN was much like Bell Labs, at least in my mind. BBN is famous for many firsts in computing; I used to work on the same floor as the guy who invented the @ sign for email addresses. However, even at BBN, some people could not understand why I was wearing a computer. I was on the employee shuttle one day, and one of the engineers asked me, “Why would you want a mobile computer?” Not, “why do you want a head-up display?” Or, “why do you want a one-handed keyboard?” But, “why a mobile computer?” I was flabbergasted! It seemed so obvious: on-the-go navigation, messaging, email, reading books, mobile gaming and writing, all possible while I was riding the employee shuttle bus or walking down the street. But how could I explain how compelling these things are to someone who thinks of computers as devices that are stuck behind desks? Now many of the things I do with my wearable are commonplace on smartphones, which makes it easier to communicate what is possible with a wearable and why I find a head-up interface to those functions compelling.

It being BBN, though, there was a group of engineers who immediately had plans for my wearable. In fact, the first day I wore it to work, I was stopped in the hallway by a team researching wearables to help lawyers do cross examinations in court. I had no idea such research was underway. The team asked to borrow my wearable for the day. They tore it apart to see how I had made it, put it back together and returned it to me the next day. I can’t believe I actually gave my machine to them, since it had taken me years and a lot of money (for a student) to make a reasonable system, but I have a lot of respect for BBN’s engineers. They are really sharp people.

Going back to school with the wearable was interesting. Some people thought I was wearing the machine just to get attention; others thought it was interesting but had no idea how much it improved my life as a student and researcher. I remember practicing a talk in front of my master’s advisor’s research group. I was wearing the display showing my talk outline and a few transition sentences where I had rough spots in previous rehearsals. It went well. With the notes on the HUD (head-up display), I was confident and composed and kept my head up, engaging my audience as opposed to looking down at my transparencies all the time. However, at the end, one of the senior Ph.D. students came up and said, “That is the best talk I ever heard you give, but why were you wearing your computer?”

Most people around me did not realize how slick one can get with a head-up display. When having a research discussion or a teaching session with undergraduates, I would often construct my sentences such that I could look up the information I needed to complete the sentence in real time on the wearable. One time in class I failed miserably. The professor asked, “What did we say was the importance of deixis?” (Deixis is basically pointing gestures.) Being overly cocky because I knew I had all of my class notes quickly accessible with my one-handed keyboard and HUD, I raised my hand and started, “At the beginning of the semester, we said that deixis was important because … uh … um … I’ll have to get back to you on that. I got myself into the wrong mode …,” which caused the rest of the class to break out in laughter. However, one of the professors who was taking the class for fun leaned over and said to me, “OK, now I get it. You pull up knowledge that quickly all the time, don’t you? Now I want one [wearable computer]!”

How long did it take before your friends and family stopped thinking it was unusual?

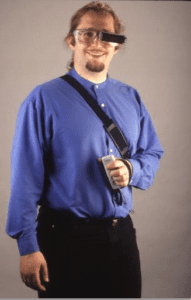

In general, it takes about two weeks before colleagues stop noticing it. In other words, after two weeks, if people pass me in the hallway and you ask them afterward whether I was wearing my computer, they have a hard time remembering. Much like eyeglasses or clothing, the technology disappears over time and becomes part of you, even when it looks like the picture above.

What is “subconscious learning”?

I prefer the term passive learning because it is really hard to test whether learning is conscious or not. Passive learning is more caught than taught. In our case, we are showing passive haptic learning (PHL) of piano. Our PHL gloves teach the “muscle memory” of how to play piano melodies without the learner’s active attention. The fingerless gloves are equipped with vibration motors at each knuckle. As a mobile MP3 player plays each note of a song, the PHL gloves tap the finger that corresponds to the respective piano key. In our testing, users can learn the first 45 notes of simple songs like “Amazing Grace” in 30 minutes while concentrating on reading comprehension exams. Similar gloves can passively teach a wearer how to type and read Braille in four hours without active attention. The effect doesn’t just work with gloves. Using the bone conduction transducer on Google Glass, we have passively taught how to recognize and produce Morse code. We’ve also shown some work in passive haptic rehabilitation, where the gloves have helped improve hand sensation and dexterity for people with partial spinal cord injury. We are now trying to determine if the gloves might help with stroke recovery, as well.

What led you to start your work with the service dogs?

Like much of my research with Melody, it started with a joke. One of my friends makes custom dog vests on Etsy, so I invited her to my lab to check out our work with electronic textiles. We thought it would be fun to make dog vests that light up differently depending on whether the dog was walking, running or lying still. Before she reached my office, she wandered into our lab and started talking with Melody, who trains service and agility dogs as a hobby, and Clint Zeagler, who works on electronic textiles. When I finally discovered where my guest was, I found them having a lot of fun joking around about different types of crazy vests we could make for different situations. Someone said something like, “Wouldn’t it be great if your dog could simply tell you what was going on instead of barking?”

“Errr, … why couldn’t we do that?”

A collaboration was born.

What has been among your most surprising discoveries so far?

I’m still surprised passive haptic learning works. It feels like there should be so much we can do with the idea, which is why we’ve been starting up research collaborations around the world on the topic.

What role might standards and IEEE have in bringing about this next wave of wearables that you envision?

They already play a role. The IEEE 802.15™ Working Group for Wireless Specialty Networks (WSN) started as the Ad Hoc Committee for “Wearables” Standards led by Dick Braley at FedEx in 1997. FedEx had seen one of my demos of wearable computers earlier and had decided that they wanted to create wearables for their couriers and drivers to allow easier tracking of the packages and enable them to better focus on the customer. When I said that there was no appropriate wireless technology for connecting the different components of the wearable to each other or to the truck, they asked what was necessary to make that technology happen. Not thinking they would actually follow through, I told them that a typical thing was to establish a specification and a standard for the technology, much as had been done for IEEE 802.11™[1]. Next thing I knew, I was invited to the standards committee (but unfortunately had to decline to finish my dissertation).

There is still a lot of work to do. Bluetooth LE is much better than the original IEEE 802.15 spec, but I suspect we can still get an order of magnitude better in power performance through a hybrid of analog and digital technology. Standards for power scavenging, transmission and charging still have a long way to go, especially for low-power devices and sensors. The same can be said for standards for security and privacy for ubiquitous computing (Internet of Things) in general.

What are you hoping to learn during your time at SXSW?

I’m always looking for creative ideas and technologies to mix together in new ways, and these events are a great excuse to explore work outside of one’s niche area—and spend some brainstorming time with colleagues you might see in person only once every few years.

Not Your Mama’s Wearables is included in the IEEE Tech for Humanity Series at the annual SXSW Conference and Festival, 10-19 March 2017. In this session, Thad Starner, Director/Founder of Georgia Tech’s Contextual Computing Group, will provide a vision for wearable computing. Learn how Thad’s leading-edge wearables enable subconscious learning and leverage AI and machine learning to enable communication between people, dogs and dolphins!

[1] IEEE 802.11™, IEEE Standard for Information technology—Telecommunications and information exchange between systems Local and metropolitan area networks—Specific requirements – Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications