Artificial Intelligence (AI) is changing the way we make critical decisions that affect the lives of individuals, including decisions made throughout the legal system.

AI offers the potential for more uniform, repeatable, and less biased decisions, but that is far from automatic. AI systems can also codify the biases, conscious and unconscious, of system builders as well as ingest biases reflected in historical training data used for machine learning algorithms, cementing these biases into opaque software systems. AI systems can also be inaccurate for many reasons other than bias. They need to be deployed in a socio-technical context in which sound evidence of their fitness for purpose is demanded, in which there are incentives to identify and correct problems, and in which stakeholders are empowered with the information they need to advocate for their own interests.

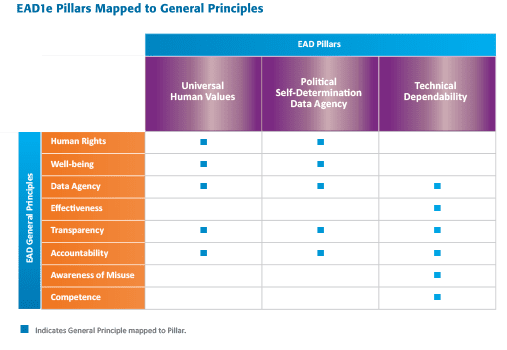

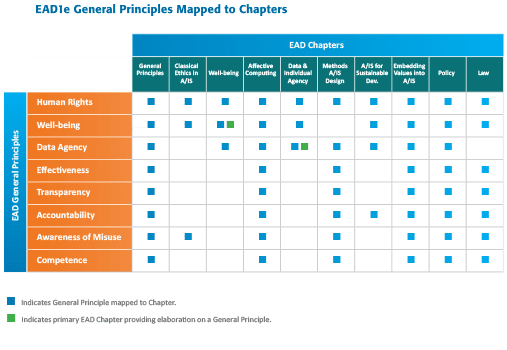

The key question of how we build fair and trustworthy AI-based systems is an essential one. In Ethically Aligned Design (EAD1e), “A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems”, The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems has laid out a set of four trust conditions to guide informed trust of autonomous and intelligent systems (A/IS):

- Effectiveness – A/IS creators and operators shall provide evidence of the effectiveness and fitness for the purpose of A/IS.

- Competence – A/IS creators shall specify and operators shall adhere to the knowledge and skill required for safe and effective operation.

- Accountability – A/IS shall be created and operated to provide an unambiguous rationale for all decisions made.

- Transparency – The basis of a particular A/IS decision should always be discoverable.

In this article, we discuss the role of sound evidence as the foundation for these trust conditions and, in particular, what constitutes sound understanding and evidence for the trust condition of Transparency. Although AI-based decision making is affecting many other critical areas such as hiring, credit, housing, and medicine, here we ground our discussion in examples from the legal system.

Transparency for AI Systems

Transparency for AI systems is composed of two fundamental elements:

- Access to reliable information about the AI system including information about the training procedure, training data, machine learning algorithms, and methods of testing and validation

- Access to a reliable explanation calibrated for different audiences – i.e. why an autonomous system behaves in a certain way under certain circumstances or would behave in a certain way under hypothetical circumstances.

Transparency can provide a “right to explanation” or a meaningful justification of an AI’s decision. What constitutes a sound explanation and what types of explanation are appropriate can vary for different stakeholders. An explanation that is clear to experts might be opaque to non-experts. All stakeholders need an explanation of the decision-making process, provided in an accessible manner.

For both experts and non-experts, we must ask how we ensure that the evidence itself is indeed sound and that the explanations based on this evidence are understandable. Sadly, in many instances, stakeholders, especially non-experts, are offered marketing claims or pseudo-scientific jargon instead of sound evidence. In the legal system in particular, it can often be the case that judges, juries, lawyers, and citizens do not have the tools to tell the difference.

Developers of an A/IS system might assert that the conditions of “Transparency” are satisfied, but without a basis in sound evidence, this can be an illusion. Thus, a discussion of what constitutes sound evidence is essential as a fundamental prerequisite for transparency and the other trust conditions such as effectiveness, competence and accountability.

It is important to collect sound evidence for each of the four trust conditions throughout the lifecycle of an A/IS system. In particular, transparency criteria can and should be applied to the design, development, procurement, deployment/operation, and validation of AI-enabled systems. Different pieces of evidence may be made available to different groups of stakeholders or auditors as appropriate. For mature systems, development iterates through these phases over time, so transparency should involve both initial testing and recurring audits. Questions to ask at each phase include, for example:

- Design: What requirements was the system designed to satisfy? What threat models were considered in the design?

- Development: Were standard practices for software development followed? What coding standards were required of programmers? What unit tests were run? Were code reviews performed?

- Procurement: Who made the decisions? On what basis? Following what evidence?

- Deployment/Operation: How was the system operationalized? By what personnel? What skills or certifications do they have that make them competent? What workflows are they using? Who designed these workflows?

- Validation: What types of internal testing have been performed? What types of errors were revealed by internal testing and how were they addressed? What types of errors were revealed after deployment? How were they addressed? Is there a need to revisit past decisions based on flaws discovered?

Effectiveness of AI Systems

One category of sound evidence relates to the effectiveness or accuracy of the outputs of a system. We can’t simply assume that the systems actually work as promised. Effectiveness, in this context, is to be more broadly understood than simple accuracy. It includes the general “fitness for purpose” of a system. Some examples of sound evidence of system effectiveness include:

- Testing and validation protocols and results (both during development and after deployment and operation)

- Compliance with technical standards and certifications

- Algorithmic risk assessment: an evaluation of the potential harms that might arise from the use of the system before it is launched into the world.

- Algorithmic impact evaluation: an evaluation of the system and its effects on its subjects after it has been launched into the world.

- Benchmarking studies — especially independent benchmarking studies.

Measures of effectiveness should also be readily comprehensible to all stakeholders. Only then will they genuinely foster trust in the system.

Systems are often deployed to make decisions in cases where the “correct” answer is fundamentally unknown, such as predicting a future event like whether a defendant will commit another crime. It can be especially tricky to assess accuracy or effectiveness in such cases because systems like this, once deployed, can fundamentally alter future conditions. For example, deploying more police to an area where crime is predicted may naturally result in finding more crime because police are present to observe it.
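The feedback effect described above can be illustrated with a deliberately simple sketch. It assumes two areas with identical true incident counts, recorded incidents that scale linearly with the share of patrols present, and next-round patrols allocated in proportion to recorded totals. The function name, the linear detection model, and all numbers are hypothetical and chosen only for illustration; real deployments are far more complex.

```python
def simulate_patrol_feedback(true_incidents, initial_share, rounds=5):
    """Toy model: incidents are only recorded where patrols are present.

    true_incidents: true incidents per round in each area (hypothetical).
    initial_share:  initial fraction of patrols sent to each area.
    Returns (recorded_totals, final_share).
    """
    share = list(initial_share)
    recorded_totals = [0.0 for _ in true_incidents]
    for _ in range(rounds):
        # Police record only what they are present to observe.
        recorded = [t * s for t, s in zip(true_incidents, share)]
        recorded_totals = [a + b for a, b in zip(recorded_totals, recorded)]
        total = sum(recorded_totals)
        if total == 0:
            raise ValueError("no incidents recorded; cannot reallocate patrols")
        # The next allocation follows the recorded data, not the true rates.
        share = [r / total for r in recorded_totals]
    return recorded_totals, share


# Both areas have the same true rate; only the starting allocation differs.
totals, final_share = simulate_patrol_feedback([100, 100], [0.6, 0.4])
print(totals, final_share)
```

Under these assumptions the recorded totals come out at roughly 300 versus 200 even though the true rates are equal, and the 60/40 patrol split never corrects itself. The recorded data appear to confirm the original prediction, which is why accuracy measured on post-deployment data alone is weak evidence of effectiveness.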

It is concerning when the only people testing a system are those who have a vested interest in its success. For example, admissibility of probabilistic genotyping software systems has often been based on peer-reviewed articles in which only developers or crime labs report results. Defense teams wishing to question results have been denied the opportunity to inspect the systems for flaws affecting their clients. Reviewers of peer-reviewed articles, however, simply decide whether the results presented in a paper are of interest to the scientific community. They do not exhaustively test the software implementation or examine it for flaws that could impact decisions in an individual case.

Forensic analysts are often unable to manually confirm the output of a probabilistic genotyping software system, so little feedback exists to diagnose flaws. Even more critically, the case of the Forensic Software Tool developed by the Office of the Chief Medical Examiner in New York offers a cautionary tale of developers who, under pressure, patch flaws in ways that could produce incorrect results rather than investing the time and effort required to correct them more completely.

Competence in Using AI Systems

Another category of sound evidence relates to the competence of those involved in the development, deployment, and assessment of systems. A credible adoption of AI into law requires the development of professional measures of competence in the use of AI.

Too often, there is little attempt to assess the skill level of those developing, using, or testing systems. Examples of sound evidence of competence could include certifications, years of experience, demonstrations of accuracy, conformity assessments, etc.

Studies have regularly shown humans’ inability to judge their own competence at using AI tools. For example, the Legal Track of the NIST 2011 Text Retrieval Conference (TREC) reported large discrepancies between participants’ own estimated recall and their actual recall as measured by TREC. One team, for instance, estimated its recall on a particular task to be 81%, but its actual recall was 56% (a gap of 25 percentage points).
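As a small worked example of the arithmetic, the sketch below computes recall and the gap between estimated and measured recall. The 81% and 56% figures come from the text above; the function names and the document counts are hypothetical and used only to make the calculation concrete.

```python
def recall(relevant_retrieved: int, relevant_total: int) -> float:
    """Fraction of all relevant documents that were actually retrieved."""
    if relevant_total <= 0:
        raise ValueError("relevant_total must be positive")
    return relevant_retrieved / relevant_total


def calibration_gap(estimated_recall: float, actual_recall: float) -> float:
    """Overestimate in percentage points (positive means overconfident)."""
    return round((estimated_recall - actual_recall) * 100, 1)


# Hypothetical counts: 560 of 1,000 relevant documents were found.
measured = recall(560, 1000)

# Figures from the text: the team estimated 81% but measured 56%.
print(calibration_gap(0.81, measured))  # 25.0
```

The gap is stated in percentage points because the two recall figures are themselves percentages; relative to the team's own estimate, the shortfall is closer to 31%.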

In 2016, the President’s Council of Advisors on Science and Technology (PCAST), which advised the Obama White House, produced a report (the PCAST report) discussing the scientific validity of various forensic technologies.

Even for manual identification technologies such as bitemark analysis, the report noted substantial problems with the way in which “experts” are deemed competent. It cited studies by the American Board of Forensic Odontology and others that demonstrated a disturbing lack of consistency in the way forensic odontologists analyze bitemarks, extending even to whether there was sufficient evidence to determine that a photographed mark was a human bitemark at all. There are many lessons here for AI-enabled systems, including the need for rigorous tests to demonstrate the competency of key agents interacting with the system over its lifecycle.

Accountability of AI Systems

Consider that, today, if someone is jailed too long because their recidivism score was wrong, no one is held accountable. Sound evidence with respect to accountability could include:

- A comprehensive map of roles and responsibilities so that each material decision can be traced back to a responsible individual

- Sound, established documentation that can provide the appropriate level of explanation to internal compliance departments, outside auditors, lawyers, judges, and ordinary citizens.

The European Union is moving towards establishing sound accountability requirements with the draft regulation laying down harmonised rules on AI (Artificial Intelligence Act). The European Commission has proposed legal obligations for AI providers regarding data and data governance (training, validation, and testing data sets), technical documentation with a detailed description of what AI system documentation should contain, and record-keeping (automatic recording of AI system’s logs). Access to such collected information will make it possible to implement the Explainability postulated in almost every document on ethical and trustworthy AI.
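To make the idea of record-keeping that supports accountability more concrete, the following is a minimal sketch of a decision log entry that ties each output to a system version and a responsible role. The field names, the `DecisionRecord` class, and the helper functions are hypothetical illustrations, not a schema taken from the draft regulation or from any specific product.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One append-only log entry per automated decision (hypothetical schema)."""
    decision_id: str
    system_version: str
    responsible_role: str  # who is accountable for this material decision
    input_digest: str      # hash of the inputs, so they can be verified later
    output: str
    recorded_at: str       # UTC timestamp in ISO 8601 format


def make_record(decision_id: str, system_version: str, responsible_role: str,
                inputs: dict, output: str) -> DecisionRecord:
    # Serialize inputs with sorted keys so equal inputs always hash equally.
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return DecisionRecord(
        decision_id=decision_id,
        system_version=system_version,
        responsible_role=responsible_role,
        input_digest=hashlib.sha256(canonical).hexdigest(),
        output=output,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )


def to_log_line(record: DecisionRecord) -> str:
    """Render the record as one JSON line suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = make_record("case-0001", "risk-model-2.3", "pretrial services supervisor",
                     {"age": 34, "prior_arrests": 2}, "low risk")
print(to_log_line(record))
```

Storing a digest rather than the raw inputs is one possible design choice: it lets an auditor later confirm which inputs produced a decision without placing personal data in the log itself.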

Balancing the Interests of Multiple Stakeholders

The scope and degree of transparency required may differ depending on the audience. What is clear evidence or explanation to one group (e.g. source code) may be opaque technical detail to others. In the context of the legal system, important stakeholders include:

- Domain experts (lawyers, incl. judges, attorneys)

- Law enforcement

- Regulatory entities/agencies/certification bodies

- Individuals affected by A/IS system decisions (parties and participants in court proceedings, accused persons, convicted persons)

- Data scientists/developers/product owners

- Managers/supervisors/executives

- Privacy advocates and ethicists

- Citizens

- The general public

For people with an appropriate level of expert knowledge, information on the details of how the A/IS system operates (its algorithm) may be made available under specified conditions. It is crucial to define the categories of information that may be disclosed (an overview might be sufficient) and the categories of experts who can have access to the more detailed information (available on demand).

Any explanation is a simplification of the full system. Lakkaraju et al. propose clear metrics for the quality of explanations, including fidelity, or the degree to which the explanation agrees with the full system; unambiguity, or the degree to which the explanation isolates a single outcome for each case; and interpretability, or the degree to which people can understand the explanation [Larraraju].

Fidelity can be measured by the amount of disagreement between the explanation and the full system (less is better). Unambiguity can be measured by the amount of overlap between explanation rules (less is better) and the number of cases covered by the explanation (more is better). Interpretability can be measured by the number of rules, the number of predicates used in those rules, and the width of each level in the tree of decisions, that is, the number of cases considered at that level (smaller is better for all three; for example, “if X1 then Y1, if X2 then Y2, if X3 then Y3” has width 3).
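The measures above can be sketched for a simple rule-based explanation. This is a simplified illustration rather than the exact formulation in the cited work: it assumes the explanation is an ordered list of if-then rules, that the first matching rule gives the explanation's answer, and that the full system is available as a callable. The example model, rules, feature names, and cases are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Case = Dict[str, float]
Rule = Tuple[Callable[[Case], bool], str]  # (condition, predicted outcome)


def explanation_metrics(rules: List[Rule],
                        model: Callable[[Case], str],
                        cases: List[Case]) -> Dict[str, float]:
    """Score a rule list against the full model on a set of cases."""
    if not cases:
        raise ValueError("at least one case is required")
    covered = disagreements = overlapping = 0
    for case in cases:
        fired = [outcome for condition, outcome in rules if condition(case)]
        if fired:
            covered += 1
            # The explanation answers with its first matching rule.
            if fired[0] != model(case):
                disagreements += 1
        if len(fired) > 1:
            overlapping += 1
    n = len(cases)
    return {
        "fidelity": 1 - disagreements / covered if covered else 0.0,
        "coverage": covered / n,     # share of cases some rule applies to
        "overlap": overlapping / n,  # share of cases matched by 2+ rules
        "num_rules": len(rules),     # crude proxy for interpretability
    }


# Hypothetical full system: more complex than the explanation admits.
def model(case: Case) -> str:
    return "high" if case["priors"] >= 3 or case["age"] < 21 else "low"


rules: List[Rule] = [
    (lambda c: c["priors"] >= 3, "high"),
    (lambda c: c["priors"] < 3, "low"),
]
cases = [
    {"priors": 4, "age": 30},
    {"priors": 0, "age": 19},  # model says "high", explanation says "low"
    {"priors": 1, "age": 40},
    {"priors": 5, "age": 20},
]
print(explanation_metrics(rules, model, cases))
# {'fidelity': 0.75, 'coverage': 1.0, 'overlap': 0.0, 'num_rules': 2}
```

In this toy case the two-rule explanation is short and unambiguous, but it misses the age condition and so agrees with the full system on only three of four cases. Adding a third rule would raise fidelity at the cost of a longer explanation.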

Other desirable properties of explanations can be that they do not use unacceptable features (e.g. using race or gender in hiring decisions) or that they provide predictive guidance (e.g. if you had more experience in a specific category, then you would be more likely to be hired for this job in the future). Work continues to be done in the research community on the features of good explanations, and there is a natural tension between different features like interpretability and fidelity.

Similarly, there can be a natural tension between transparency and protection of intellectual property rights or other protection of methods. Tradeoffs commensurate with the stakes and impact of the applications are necessary. A high degree of transparency could be required for decisions in regulated areas like hiring, housing, and credit or public decisions like criminal justice or allocation of public resources. For lower stakes or private decisions, a lower level of transparency could be required. Even such limited disclosure of information needs to be sufficient to ascertain whether A/IS meet acceptable standards of effectiveness, fairness, and safety. Even for higher stakes decisions, there can be reasons not to require full transparency [Wachter]. One example is algorithms used to flag tax returns for further human examination. Full transparency of the algorithms used to flag cases could lead to gaming of the system. It is worth noting, however, that in this example, flagging leads to further human examination and not to automatic decisions.

Different stakeholders have different interests in the balance between transparency and protection of methods. It is important to recognize and highlight these tensions and resolve them through the normal societal processes of open discussion and resolution. In other words, the processes for defining standards for transparency, effectiveness, competence, and accountability should themselves be transparent. When life and liberty are at stake in criminal trials, the interest of defendants and the public in understanding evidence presented at trial has generally been judged to be more important than the intellectual property rights of developers. Too often, the tradeoffs are resolved behind the scenes by programmers of automated systems rather than by domain experts, and without discussion and input from relevant stakeholders.

Learn More and Get Involved

Given the four trust conditions for AI systems, we encourage judges, juries, lawyers and citizens to look beyond marketing claims or pseudo-scientific jargon and insist on sound evidence of effectiveness, competence, accountability, and transparency whenever AI-based results are used in a legal context.

Learn more about the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems and download the Ethically Aligned Design (EAD1e) report for free.

Authors:

- Gabriela Bar, IEEE Global Initiative Law Committee Member

- Gabriela Wiktorzak, IEEE Global Initiative Law Committee Member

- Jeanna Matthews, IEEE AI Policy Committee member